Businesses looking to improve their development cycle, or the portability and usability of their applications, have a few options, including going serverless or working with containers. Each approach comes with its own pros, cons, and best-fit use cases. We’ll discuss the differences between serverless and containers, the benefits and challenges of each, and the areas where one or both can be of use.

What is Serverless Computing and How Does it Work?

Serverless computing, aka “serverless”, is an approach to building and running applications without having to manage the underlying infrastructure, such as virtual machines or servers. While serverless computing doesn’t mean there are literally no servers involved, it abstracts away the need for developers to manage them directly. How? A cloud service provider handles the provisioning and scaling of the computing resources needed to run the application code. The provider also maintains and scales the servers behind the scenes, allowing developers to focus on writing the application code rather than worrying about infrastructure.

For serverless computing to work, you simply write your application code, package it as functions or services, and the cloud provider takes care of executing that code only when it is triggered by an event or request. For example, an incoming email may trigger a task to log the email somewhere, which is executed via the serverless platform.
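
As a rough illustration of the email example above, the following Python sketch shows the shape such a function might take. The handler name `log_incoming_email`, the event fields, and the in-memory `EMAIL_LOG` are all assumptions made for illustration; real platforms define their own event formats and invocation conventions.

```python
from datetime import datetime, timezone

# Hypothetical in-memory log; a real function would write to a database or log service.
EMAIL_LOG = []


def log_incoming_email(event):
    """Handle one 'email received' event and record it.

    The event shape (sender, subject) is assumed for this sketch.
    """
    entry = {
        "sender": event["sender"],
        "subject": event["subject"],
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }
    EMAIL_LOG.append(entry)
    return {"status": "logged", "count": len(EMAIL_LOG)}


if __name__ == "__main__":
    # Locally we call the handler ourselves; on a serverless platform the
    # provider would invoke it whenever the triggering event occurs.
    result = log_incoming_email({"sender": "a@example.com", "subject": "Hello"})
    print(result)
```

The point of the sketch is that the code contains no server setup at all: it only describes what should happen when the event arrives.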

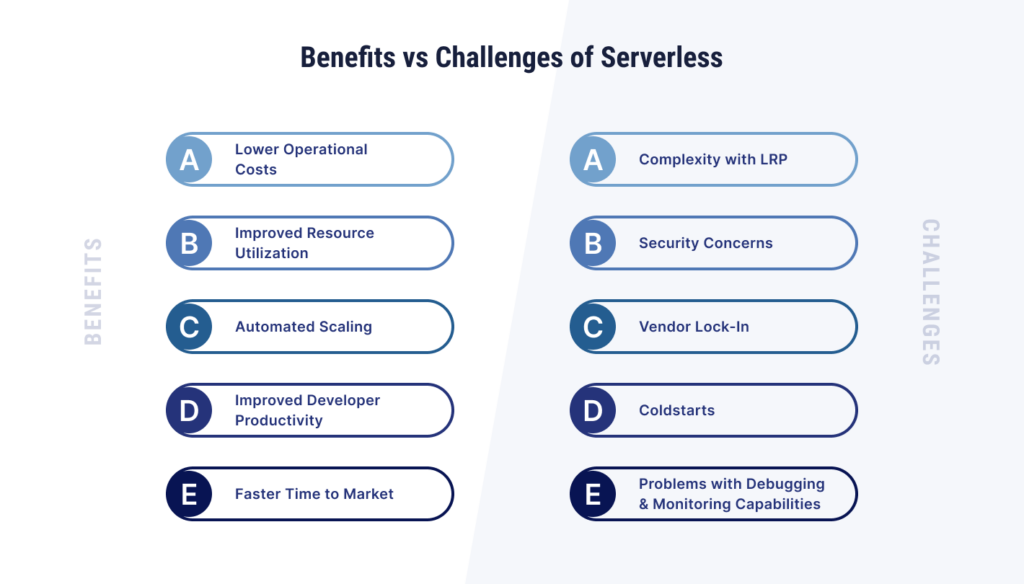

Benefits and Challenges of Serverless Computing

Serverless computing is a unique way to run applications, and it also comes with unique benefits and challenges.

Benefits of Serverless

- Lower Operational Costs: Because there’s no server management overhead, businesses can save money on infrastructure provisioning, scaling, and management. You pay only for the resources consumed when your code is invoked.

- Improved Resource Utilization: Serverless computing eliminates the need to manage idle servers. Instead, resources are dynamically allocated and scaled up or down based on real-time application needs. This on-demand approach translates to significant cost savings since you’ll only pay for the resources you use, and the resources are also used more efficiently.

- Automated Scaling: Traffic spikes and dips can be accommodated easily through serverless architecture, improving performance without the need for manual action.

- Improved Developer Productivity: Without the complexities of managing a server, developers can focus on application-centric matters, such as core logic and improving development cycles.

- Faster Time to Market: Because serverless computing can speed up development cycles, it can also cut down on the time it takes to get a product to market.

Challenges of Serverless

- Complexity with Long-Running Processes (LRP): Serverless isn’t the best approach for long-running computations, such as applications that run a single process for hours or days. Many serverless platforms cap how long a single invocation can run, and workloads of this kind can suffer from inconsistent performance and higher costs.

- Security Concerns: While serverless applications offer development and scalability benefits, they introduce unique security challenges including vulnerabilities in the code itself, unauthorized access to resources due to misconfigurations, and data privacy concerns. To mitigate these risks, developers must use strong security best practices, such as implementing proper authentication and authorization mechanisms, encrypting sensitive data, and regularly conducting security audits.

- Vendor Lock-In: It can be hard to move to another vendor later if businesses rely heavily on a certain cloud provider’s serverless platform. This may prevent you from getting the best pricing or service you could have.

- Cold Starts: Unlike traditional applications that are constantly running in the background, serverless functions spin up when needed. This can introduce a noticeable latency spike when a function is first invoked because the environment needs to be initialized. This initial delay can be a concern for applications where responsiveness is critical, such as real-time chat or mobile gaming.

- Problems with Debugging and Monitoring Capabilities: Traditional server environments are perfect for monitoring and debugging tools, but these tools may not work as well in serverless environments. So, transitioning to serverless often requires developers to embrace new tools and workflows specifically designed for this environment. These tools might include function-level monitoring solutions and cloud-native observability platforms that cater to serverless functions.
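
The cold-start item in the list above can be mimicked locally. This is a minimal Python sketch, not a model of any provider's runtime: `FunctionInstance`, its `init_seconds` parameter, and the simulated delay are hypothetical.

```python
import time


class FunctionInstance:
    """Simulates one execution environment for a serverless function."""

    def __init__(self, init_seconds=0.05):
        self.init_seconds = init_seconds  # assumed environment setup time
        self.initialized = False

    def invoke(self, payload):
        cold = not self.initialized
        if cold:
            # First invocation: pay the one-time initialization cost.
            time.sleep(self.init_seconds)
            self.initialized = True
        return {"cold_start": cold, "echo": payload}


if __name__ == "__main__":
    instance = FunctionInstance()
    for i in range(3):
        started = time.perf_counter()
        result = instance.invoke(i)
        elapsed_ms = (time.perf_counter() - started) * 1000
        print(f"call {i}: cold_start={result['cold_start']} took {elapsed_ms:.1f} ms")
```

In this sketch, only the first call pays the initialization delay; later calls reuse the already-initialized instance.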

Use Cases for Serverless Computing

Serverless computing can be used in several scenarios as a flexible development model. Microservices and event-driven APIs are well suited to serverless platforms. Data streams can also trigger serverless functions, allowing for real-time analytics and data processing. Internet of Things (IoT) devices, the back end of web and mobile applications, and content delivery networks (CDNs) can all use serverless platforms. Serverless functions can even be chained together to create a workflow, automating complex tasks with a series of events.
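
To make the chaining idea concrete, here is a small Python sketch in which each step emits an event that triggers the next. The event names, the `HANDLERS` registry, and the `run_workflow` loop are invented for this example; managed workflow services work differently in detail.

```python
from collections import deque

HANDLERS = {}  # hypothetical registry: event name -> handler function


def on(event_name):
    """Register a function as the handler for one event type."""
    def register(func):
        HANDLERS[event_name] = func
        return func
    return register


@on("file_uploaded")
def validate(data):
    return "file_validated", {**data, "valid": data["size"] > 0}


@on("file_validated")
def resize(data):
    return "file_resized", {**data, "size": data["size"] // 2}


@on("file_resized")
def notify(data):
    return None, {**data, "notified": True}  # None ends the chain


def run_workflow(event_name, data):
    """Process events until no handler emits a follow-up event."""
    queue = deque([(event_name, data)])
    steps = []
    while queue:
        name, data = queue.popleft()
        next_name, data = HANDLERS[name](data)
        steps.append(name)
        if next_name is not None:
            queue.append((next_name, data))
    return steps, data


if __name__ == "__main__":
    print(run_workflow("file_uploaded", {"name": "photo.png", "size": 2048}))
```

Each handler knows only about its own input and the event it emits next, which is what lets the steps be deployed and scaled as separate functions.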

What Are Containers and How Do They Work?

If you’re looking to virtualize at the operating system (OS) level, containers may be the right fit. Containers are software units that package application code together with its libraries and dependencies. These lightweight, portable units can run in the cloud, on a desktop, or on traditional IT infrastructure. With containers, multiple isolated instances can run on a single host OS, sharing its kernel while each keeps its own file system, memory, and applications.

Containers work by first creating a container image with code, configurations, and dependencies. This image is used to create a container instance when the application is run. Sometimes, multiple containers may need to operate together, which is where orchestration tools may play a role in ensuring that containers are started and stopped at the correct times.
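
The start-and-stop ordering mentioned above can be sketched as a dependency sort. This is an illustrative Python example using the standard library's `graphlib` (Python 3.9 or later); the service names and the `DEPENDS_ON` mapping are hypothetical, and real orchestration tools handle far more, such as health checks, restarts, and networking.

```python
from graphlib import TopologicalSorter

# Hypothetical services: each key lists the containers it depends on.
DEPENDS_ON = {
    "database": set(),
    "cache": set(),
    "api": {"database", "cache"},
    "web": {"api"},
}


def start_order(depends_on):
    """Return an order in which every container starts after its dependencies."""
    return list(TopologicalSorter(depends_on).static_order())


def stop_order(depends_on):
    """Stop in reverse, so dependents shut down before what they rely on."""
    return list(reversed(start_order(depends_on)))


if __name__ == "__main__":
    print("start:", start_order(DEPENDS_ON))
    print("stop: ", stop_order(DEPENDS_ON))
```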

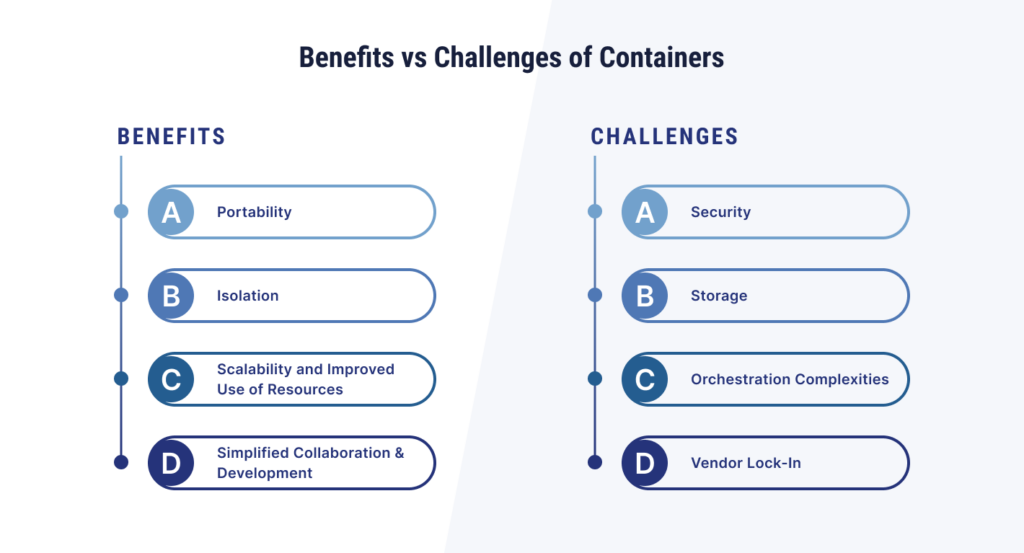

Benefits and Challenges of Containers

While containers can be lightweight, portable, and easy to scale, they can also come with challenges businesses should weigh before deciding to use them.

Benefits of Containers

- Portability: Containers can be deployed across different environments because they run on any system that has a compatible container runtime (the software that loads and manages containers). This simplifies moving an application through development, testing, and production.

- Isolation: Containers offer process-level isolation, a powerful feature. This means applications run in self-contained environments, unable to directly impact each other or the underlying host system. This isolation is crucial for security and stability as it prevents conflicts between applications and their dependencies.

- Scalability and Improved Use of Resources: Containers can be scaled up or down as application demand changes, improving resource allocation. Hardware is also used more efficiently because containers share the host kernel rather than each running a full operating system.

- Simplified Collaboration and Development: Collaboration is improved with standardized container images. These consistent and collaborative environments also foster more efficient development lifecycles.

Challenges of Containers

- Security: Isolation can give containers a valuable security boost. However, security vulnerabilities can still exist in the container image or the runtime. With that, it’s crucial to implement strong security measures and regularly update container images to help decrease security risks.

- Storage: Resources can be optimized with containers, but if businesses aren’t careful, container deployments can pile up and result in more container images than are necessary. Storage management is critical.

- Orchestration Complexities: In a complex deployment that spans multiple containers, managing any individual container is straightforward, but coordinating them all properly can pose more of a challenge.

- Vendor Lock-In: As with serverless computing, lock-in can be a concern, because some containerization tools may only work with one cloud provider. Look for vendor-neutral solutions if this worries you.

Use Cases for Containers

Like serverless platforms, containers can be used for microservices applications, but they have many other use cases as well. Cloud-native development depends on containers, which can be used to scale seamlessly across cloud environments. Continuous integration and delivery (CI/CD) pipelines can be aided by containers, which offer consistent environments throughout the development lifecycle. Businesses can even choose to modernize legacy applications through containerization, removing a barrier to cloud migration. Containerization is also appropriate for emerging technologies such as machine learning, high-performance computing, big data analytics, and software for IoT devices.

Serverless vs. Containers: Key Differences

While serverless and containers can be used in similar ways, there are some key differences between the two technologies.

Architectural Differences

With serverless, businesses don’t have to worry about server infrastructure, just the code. A container, by contrast, is a self-contained unit that includes the application code, configuration, and dependencies, and developers retain some level of responsibility for managing the underlying servers.

Scalability

While both serverless architecture and containers offer scalability, there’s a difference in how scaling is managed. Containers are typically scaled with orchestration tools like Kubernetes, which handle the deployment, scaling, and management of containerized applications across clusters of servers. In serverless environments, by contrast, the cloud provider handles scaling automatically, abstracting infrastructure management away from developers.
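
For a sense of what an orchestrator's scaling decision involves, Kubernetes documents its Horizontal Pod Autoscaler as computing a desired replica count from the ratio of the current metric value to the target value. The Python sketch below reproduces only that core ratio; the function name and the `min_replicas` and `max_replicas` defaults are assumptions, and the real autoscaler adds tolerances, stabilization windows, and other safeguards.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Simplified replica calculation: ceil(current * currentMetric / targetMetric)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (bounds here are illustrative defaults).
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target.
    print(desired_replicas(4, 90, 60))
```

With containers, someone on your team chooses the metric, the target, and the bounds. On a serverless platform the provider makes the equivalent decision for you.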

Deployment, Management, and Maintenance

Businesses enjoy a simplified deployment process with serverless platforms. A cloud provider will handle the infrastructure updates and management. This is different from containerization, where developers will need to manage container images, orchestration, and servers.

Testing

Containers are easier to test, because they offer a more controlled environment that resembles production. Serverless configurations, on the other hand, can be harder to test because serverless functions are more ephemeral.

Lock-in and Portability

Lock-in is a bigger concern with serverless than with containers. With serverless platforms, code is more likely to be specific to a particular cloud provider, whereas open-source, vendor-agnostic container tools are easier to find.

Factors to Consider When Choosing Between Serverless vs. Containers

Because there are distinct differences between going serverless and using containers, businesses need to carefully evaluate their needs and capabilities to decide what will work best for them. You may want to consider the following when making your decision.

Application Requirements

Short-lived, event-driven tasks that have unpredictable traffic are perfect for serverless. Comparatively, containers work well for long-running processes and applications. Predictable workloads are better for containers, whereas automatic on-demand scaling is best for serverless.

Cost and Pricing Models

Serverless billing is pay-per-use, which can be cost-effective for applications that experience sporadic traffic. If traffic is consistent, especially at a high level, containers may be more economical. Vendor lock-in can also keep you from taking advantage of competitive pricing, which tends to be a bigger problem for serverless.
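
The trade-off above comes down to simple arithmetic. The Python sketch below compares a pay-per-use bill with a flat monthly cost; every price in it is a made-up placeholder, not any provider's actual rate, and the model ignores free tiers, memory sizing, and data transfer.

```python
def pay_per_use_cost(invocations, price_per_invocation):
    """Monthly cost when billed only for invocations (simplified model)."""
    return invocations * price_per_invocation


def break_even_invocations(flat_monthly_cost, price_per_invocation):
    """Invocation count at which pay-per-use costs as much as the flat rate."""
    return flat_monthly_cost / price_per_invocation


if __name__ == "__main__":
    # Placeholder prices chosen only to keep the arithmetic easy to follow.
    FLAT_MONTHLY = 50.0        # hypothetical always-on container host
    PER_INVOCATION = 0.00002   # hypothetical per-request price

    for monthly_requests in (100_000, 1_000_000, 10_000_000):
        usage = pay_per_use_cost(monthly_requests, PER_INVOCATION)
        cheaper = "pay-per-use" if usage < FLAT_MONTHLY else "flat rate"
        print(f"{monthly_requests:>10,} requests: "
              f"${usage:,.2f} vs ${FLAT_MONTHLY:,.2f} -> {cheaper}")
```

With these placeholder numbers the break-even point is roughly 2.5 million requests per month: below it pay-per-use is cheaper, above it the flat rate wins. Plug in your own provider's pricing before drawing conclusions.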

Development and Operational Complexities

Businesses looking for lower levels of complexity around server management will be happier with serverless environments. Containers require more up-front configuration and leave more for businesses to manage on their own. Because serverless applications are more ephemeral, debugging and monitoring can be more complicated compared to containers.

In-House Development Expertise

You’ll also want to consider what your team can already do. If your team is well-versed in container orchestration, containers can be the way to go. If you’re looking for a shorter learning curve, serverless may be for you. However, both technologies can require additional training, as well as a working familiarity with cloud platforms.

Security

The shared responsibility model explains who is responsible for securing each component of an infrastructure. In serverless environments, businesses need to secure their code and data. Containers require businesses to secure the container image as well as the host environment. Cloud providers will patch infrastructure for clients in serverless environments, while businesses will need to scan and update container images themselves to combat vulnerabilities.

Integration with Current Infrastructure

If you’ve already invested in container orchestration or virtual machines, adding more containers can be a more efficient next step. However, if you haven’t invested in any new infrastructure, serverless can provide a much-needed jumpstart.

Can You Use Both Serverless and Containers?

When weighing the merits and drawbacks of serverless computing and containers, you may think you have to pick one, but in some scenarios a hybrid architecture is more appropriate. For example, serverless functions can handle event-driven tasks with unpredictable traffic, while containers serve more predictable operations.

Choosing the Right Technology for Your Objectives

Once you thoroughly understand your project’s requirements, your existing resources, and your development team’s capabilities, you can select the technology that is right for you. Consider the workload type, scalability needs, security implications, budget, existing infrastructure, team skills, and ability to handle a learning curve in the decision-making process.

Need some support to make your decision? You don’t have to decide solo. Learn more about our IT advisory services and talk with a member of our team today.

FAQs

What is the difference between virtual machines, containers, and serverless computing?

Virtual machines (VMs), containers, and serverless computing can all allow applications to run, but they each have their own characteristics in terms of virtualization. VMs virtualize the hardware layer of a computer system, containers virtualize the operating system (OS) layer, and serverless computing removes the need to manage servers completely.

Is serverless better than containers?

Going serverless isn’t better or worse than using containers. The best choice will depend on your application’s needs. For example, if you value speed and agility more, going serverless may be the correct route, whereas containers will be better suited for applications that require closer control over resources.