While high-performance computing (HPC) can bring benefits such as faster diagnostics in health care, rapid financial simulations, and support for autonomous vehicles, high-performance workloads are also much more resource-intensive for data centers. When it comes to sustainable high-performance computing, businesses need to strike a balance. If an organization is too focused on performance, it may miss opportunities to become more sustainable. Conversely, if it focuses too much on sustainability, the user experience may suffer. Where’s the middle path? While it’s difficult for HPC to be completely sustainable, there are measures businesses can take to advance sustainability without compromising performance.

What is Sustainable High Performance Computing?

Sustainable HPC aims to deliver the massive computational power associated with HPC systems while limiting their environmental impact. Instead of focusing solely on performance, sustainable HPC considers the full lifespan of the system, from the design of the infrastructure, to how resources are used, to how components are disposed of or recycled at the end of life.

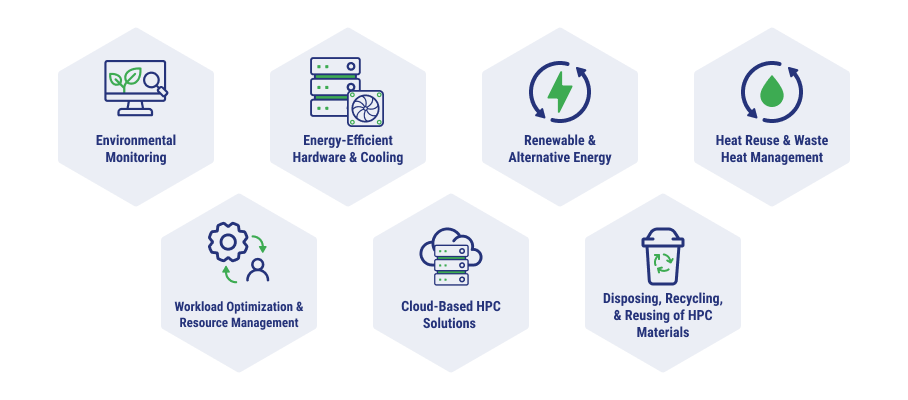

7 Ways to Advance Sustainable High Performance Computing

While sustainability and high-performance computing may not be entirely compatible, there are steps businesses can take to become more energy-efficient.

Leverage Environmental Monitoring

With environmental monitoring, businesses collect and analyze data on the physical conditions of a data center environment, including humidity, temperature, airflow, and power consumption. This data can be used to identify opportunities for more energy-efficient configurations.
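As a rough illustration of how monitoring data might be turned into actionable findings, the sketch below averages sensor readings per rack and flags racks that fall outside a target range. The `Reading` fields, rack names, and threshold values are all hypothetical and chosen only for the example; real limits depend on the facility and its equipment.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    rack: str
    inlet_temp_c: float
    humidity_pct: float
    power_kw: float


# Hypothetical thresholds for illustration only.
MAX_INLET_TEMP_C = 27.0
HUMIDITY_RANGE_PCT = (20.0, 80.0)


def summarize(readings):
    """Average readings per rack and list any threshold breaches."""
    by_rack = {}
    for r in readings:
        by_rack.setdefault(r.rack, []).append(r)

    report = {}
    low, high = HUMIDITY_RANGE_PCT
    for rack, rows in by_rack.items():
        avg_temp = mean(r.inlet_temp_c for r in rows)
        avg_humidity = mean(r.humidity_pct for r in rows)
        avg_power = mean(r.power_kw for r in rows)
        flags = []
        if avg_temp > MAX_INLET_TEMP_C:
            flags.append("inlet temperature high")
        if not low <= avg_humidity <= high:
            flags.append("humidity out of range")
        report[rack] = {
            "avg_temp_c": avg_temp,
            "avg_humidity_pct": avg_humidity,
            "avg_power_kw": avg_power,
            "flags": flags,
        }
    return report


sample = [
    Reading("rack-a", 25.0, 45.0, 12.0),
    Reading("rack-a", 26.0, 47.0, 14.0),
    Reading("rack-b", 29.0, 50.0, 20.0),
    Reading("rack-b", 31.0, 52.0, 22.0),
]
report = summarize(sample)
for rack, stats in report.items():
    print(rack, stats["flags"] or "ok")
```

A report like this could point to racks where airflow or workload placement deserves a closer look.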

Energy-Efficient Hardware and Cooling Systems

Businesses can minimize energy consumption using energy-efficient hardware components and advanced cooling technologies. Liquid cooling in data centers is generally more efficient than air cooling because water and other liquid coolants absorb and transfer heat far more effectively than air. This means components can remain cooler at higher loads. Replacing older hardware with newer, more energy-efficient equipment can also reduce consumption, since newer generations typically deliver more performance per watt.
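To make the water-versus-air comparison concrete, the sketch below estimates how much coolant must flow past a heat source to carry away a given load, using the relation Q = ρ · V · c · ΔT. The material properties are rounded textbook values at roughly room temperature, and the 30 kW load and 10 K temperature rise are hypothetical figures picked only for illustration.

```python
# Approximate properties near room temperature (rounded textbook values).
WATER_DENSITY = 997.0         # kg/m^3
WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K)
AIR_DENSITY = 1.2             # kg/m^3
AIR_SPECIFIC_HEAT = 1005.0    # J/(kg*K)


def flow_needed_m3_per_s(heat_load_w, delta_t_k, density, specific_heat):
    """Volume flow required to remove heat_load_w watts when the coolant
    warms by delta_t_k kelvin: Q = density * flow * specific_heat * dT."""
    return heat_load_w / (density * specific_heat * delta_t_k)


# Hypothetical example: a 30 kW rack with a 10 K coolant temperature rise.
HEAT_LOAD_W = 30_000.0
DELTA_T_K = 10.0

water_flow = flow_needed_m3_per_s(
    HEAT_LOAD_W, DELTA_T_K, WATER_DENSITY, WATER_SPECIFIC_HEAT
)
air_flow = flow_needed_m3_per_s(
    HEAT_LOAD_W, DELTA_T_K, AIR_DENSITY, AIR_SPECIFIC_HEAT
)

print(f"water: {water_flow * 1000:.2f} L/s")
print(f"air:   {air_flow:.2f} m^3/s")
print(f"air needs about {air_flow / water_flow:.0f}x the volume flow")
```

Under these assumptions, air needs a few thousand times the volume flow of water to remove the same heat. This is a simplified comparison that ignores pumps, fans, and heat exchanger design.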

Renewable and Alternative Energy Integration

Depending on the data center your organization uses, the facility may integrate renewable energy sources, such as solar or wind power, into its power infrastructure. Even partial shifts toward renewables can add up to meaningful emissions reductions over time. In addition, some data centers are taking advantage of alternative energy sources like on-site fuel cells, which can produce lower emissions than traditional sources of energy.

Heat Reuse and Waste Heat Management

Because HPC involves a lot of operating power, it also generates a lot of heat. Businesses can implement innovative solutions for reusing heat generated by HPC systems, including district heating or greenhouse climate control.

With district heating networks, captured heat is used to warm nearby buildings. Hot water is sent from the data center to homes and other buildings through insulated pipes. This can lower the community’s carbon footprint and its reliance on fossil fuels for heating.

Greenhouse climate control involves taking heat generated from HPC systems and sharing it with greenhouses to maintain ideal temperature and humidity levels for the plants inside. These are win-win solutions for data centers and surrounding communities.

Workload Optimization and Resource Management

Organizations can also minimize data center energy waste by efficiently utilizing resources and optimizing HPC workloads. Workload scheduling, load balancing, virtualization, and containerization can all aid in consolidating resources.

Scheduling tools can assign tasks to computing resources based on the most efficient time to execute them. By holding a job until other demanding tasks have finished, a scheduler can avoid unnecessary competition for resources. Load balancing distributes workloads evenly across available resources so that no single server is overloaded while others sit idle.
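One simple way to picture load balancing is a greedy "least-loaded first" assignment, sketched below. It is only an illustration, not how any particular scheduler works: the job names, cost estimates, and server names are hypothetical, and production HPC schedulers weigh many more factors, such as priorities, memory, and interconnect topology.

```python
import heapq


def balance(jobs, servers):
    """Assign jobs to servers greedily: take the largest job first and
    place it on whichever server currently has the least load."""
    heap = [(0.0, name) for name in servers]
    heapq.heapify(heap)
    assignment = {name: [] for name in servers}

    for job, cost in sorted(jobs.items(), key=lambda kv: kv[1], reverse=True):
        load, name = heapq.heappop(heap)      # least-loaded server
        assignment[name].append(job)
        heapq.heappush(heap, (load + cost, name))

    loads = {name: load for load, name in heap}
    return assignment, loads


# Hypothetical jobs with estimated cost in node-hours.
jobs = {"render": 8, "simulate": 7, "train": 6, "index": 5, "report": 4}
assignment, loads = balance(jobs, ["s1", "s2"])
print(assignment)
print(loads)
```

Sorting the largest jobs first tends to leave the small jobs for evening out the final loads, which usually gives a tighter balance than assigning jobs in arrival order.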

Virtualization allows multiple virtual machines (VMs) to run on one server. Each VM operates as its own device with its own operating system and applications. This makes allocating resources to each workload more effective and lets servers scale with demand. Containerization takes this a step further by packaging applications so that they share the host machine’s operating system instead of each running a full one. Because containers are lighter than VMs, server utilization and resource allocation can be managed at an even finer grain.

Cloud-Based HPC Solutions

Cloud-based HPC solutions can bring on-demand resource scaling, and potentially lower energy consumption, to your data center compared to on-premises deployment. These cloud-based services can allow a team to burst processing power without provisioning additional physical equipment. Teams won’t have to maintain underused hardware or worry about the energy costs associated with idle resources.

Responsible Disposal, Recycling, and Reuse of HPC Materials

The materials used in HPC systems can be hazardous if not disposed of properly. For this reason, data centers should consider the system’s complete lifecycle, including how to extend the lifespan of certain components.

Some manufacturers offer take-back programs for electronic components, which can make recycling easier. Businesses may also want to partner with specialized electronics recyclers to establish a regular practice of responsible disposal and recycling of HPC materials.

Upgrading certain components can be a cost-effective and resource-efficient method to extend your system’s lifespan. Refurbishing retired components or upgrading memory or storage can bring new life to your HPC data center, without replacing more resource-intensive parts of the environment.

Can Artificial Intelligence and Machine Learning Help Optimize Sustainable HPC?

Artificial intelligence and machine learning (AI/ML) workloads are often part of HPC systems. These technologies require a massive amount of resources, but they could also be used to reimagine data center sustainability.

By analyzing sensor data and system logs, AI and ML models can predict potential hardware failures or performance degradation in HPC systems. This enables proactive data center maintenance and preventive actions, reducing downtime and extending the lifespan of infrastructure.
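The idea of spotting trouble in sensor data can be sketched with a very simple statistical check. The example below is not an ML model; it uses a rolling z-score as a stand-in to flag readings that deviate sharply from recent history. The window size, threshold, and sample temperatures are hypothetical.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(values, window=5, threshold=3.0):
    """Return the indices of values that differ from the mean of the
    preceding `window` values by more than `threshold` standard deviations."""
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                flagged.append(i)
        history.append(value)
    return flagged


# Hypothetical component temperatures in degrees Celsius.
temps = [40.0, 41.0, 40.5, 39.5, 40.0, 40.5, 55.0, 40.0]
flagged = flag_anomalies(temps)
print(flagged)
```

A trained model could replace the z-score check and draw on many signals at once, such as fan speeds, error logs, and power draw, but the workflow is similar: learn what normal looks like, then surface deviations early enough to act on them.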

AI/ML enhancements could also be used to monitor resources and identify usage trends to discover new efficiency opportunities. AI/ML tools can help integrate previously disparate workloads, finding efficiencies that decrease overall resource usage.

On the software level, AI/ML can help with data management and developer tools, and allow for more efficient queueing. It’s easy to think of AI/ML workloads as energy drains, but they can be implemented in more sustainable ways. For example, businesses may be able to scale AI/ML workloads on GPUs, whose high memory bandwidth and parallelism can complete suitable jobs more quickly than CPUs, freeing resources for other compute tasks.

Embracing the Future of Sustainable High Performance Computing

As you begin to research and identify opportunities to make your HPC initiatives more sustainable, TierPoint is here to help. Our team is experienced in balancing excellent performance with minimizing the environmental impact of these workloads. Reach out today to learn more.

In the meantime, download our whitepaper to discover additional ways AI/ML can be used to improve business processes and operations.